About a billion years ago, Bertrand Meyer (he of Open-Closed Principle fame) introduced a programming language called Eiffel. It had a feature called Design by Contract, which let you define constraints that your program had to adhere to during execution. It’s like the way you can convince C compilers to emit checks for rules like integer underflow everywhere in your code, except that you can write your own rules.

To see what Design by Contract is like, here’s a little Objective-C (I suppose I could use Eiffel, as EiffelStudio is in Homebrew, but I didn’t). Here’s my untested, un-contractual Objective-C Stack class.

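    @interface Stack : NSObject

    - (void)push:(id)object;
    - (id)pop;

    @property (nonatomic, readonly) NSInteger count;

    @end

    static const int kMaximumStackSize = 4;

    @implementation Stack
    {
        __strong id buffer[4]; // size must match kMaximumStackSize
        NSInteger _count;
    }

    // No bounds check: pushing onto a full stack writes past the end of buffer.
    - (void)push:(id)object
    {
        buffer[_count++] = object;
    }

    // No emptiness check: popping an empty stack reads before the start of buffer.
    - (id)pop
    {
        id object = buffer[--_count];
        buffer[_count] = nil;
        return object;
    }

    @end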

Seems pretty legit. But I’ll write out the contract: the rules to which this class will adhere, provided its users do too. Firstly, some invariants: the count will never go below 0 or above the maximum number of objects. Unlike Eiffel, Objective-C doesn’t have any syntax for this, so it looks just a little bit messy.

    @interface Stack : ContractObject

    - (void)push:(id)object;
    - (id)pop;

    @property (nonatomic, readonly) NSInteger count;

    @end

    static const int kMaximumStackSize = 4;

    @implementation Stack
    {
        __strong id buffer[4]; // size must match kMaximumStackSize
        NSInteger _count;
    }

    - (NSDictionary *)contract
    {
        // Invariant: 0 <= count <= kMaximumStackSize.
        NSPredicate *countBoundaries = [NSPredicate predicateWithFormat: @"count BETWEEN %@",
                                        @[@0, @(kMaximumStackSize)]];
        NSMutableDictionary *contract = [@{@"invariant" : countBoundaries} mutableCopy];
        // Entries from the superclass replace ours where the keys clash.
        [contract addEntriesFromDictionary:[super contract]];
        return contract;
    }

    // The public methods are implemented under an in_ prefix, so that
    // ContractObject can intercept calls to push: and pop.
    - (void)in_push:(id)object
    {
        buffer[_count++] = object;
    }

    - (id)in_pop
    {
        id object = buffer[--_count];
        buffer[_count] = nil;
        return object;
    }

    @end

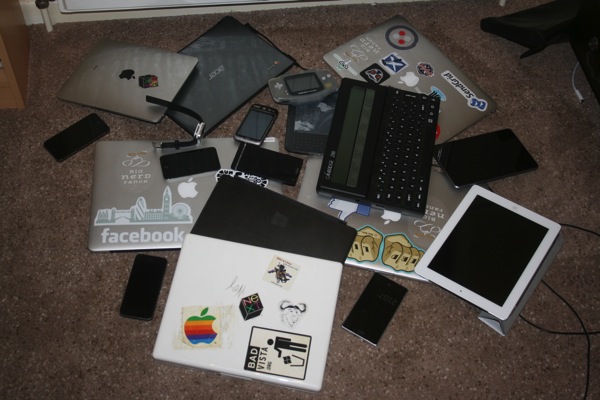

I said the count must never go outside of this range. In fact, the invariant only has to hold before and after calls to public methods: it’s allowed to be broken while a method is executing. If you’re wondering how this interacts with threading: confine ALL the things! Anyway, let’s see whether the contract is adhered to.

    int main(int argc, char *argv[]) {
        @autoreleasepool {
            Stack *stack = [Stack new];
            for (int i = 0; i < 10; i++) {
                [stack push:@(i)];
                NSLog(@"stack size: %ld", (long)[stack count]);
            }
        }
    }

    2014-08-11 22:41:48.074 ContractStack[2295:507] stack size: 1
    2014-08-11 22:41:48.076 ContractStack[2295:507] stack size: 2
    2014-08-11 22:41:48.076 ContractStack[2295:507] stack size: 3
    2014-08-11 22:41:48.076 ContractStack[2295:507] stack size: 4
    2014-08-11 22:41:48.076 ContractStack[2295:507] *** Assertion failure in -[Stack forwardInvocation:], ContractStack.m:40
    2014-08-11 22:41:48.077 ContractStack[2295:507] *** Terminating app due to uncaught exception 'NSInternalInconsistencyException', reason: 'invariant count BETWEEN {0, 4} violated after call to push:'

Erm, oops. OK, this looks pretty useful. I’ll add another clause: the caller isn’t allowed to call -pop unless there are objects on the stack.

    - (NSDictionary *)contract
    {
        NSPredicate *countBoundaries = [NSPredicate predicateWithFormat: @"count BETWEEN %@",
                                        @[@0, @(kMaximumStackSize)]];
        NSPredicate *containsObjects = [NSPredicate predicateWithFormat: @"count > 0"];
        NSMutableDictionary *contract = [@{@"invariant" : countBoundaries,
                                           @"pre_pop" : containsObjects} mutableCopy];
        [contract addEntriesFromDictionary:[super contract]];
        return contract;
    }

So I’m not allowed to hold it wrong in this way, either?

    int main(int argc, char *argv[]) {
        @autoreleasepool {
            Stack *stack = [Stack new];
            id foo = [stack pop];
        }
    }

    2014-08-11 22:46:12.473 ContractStack[2386:507] *** Assertion failure in -[Stack forwardInvocation:], ContractStack.m:35
    2014-08-11 22:46:12.475 ContractStack[2386:507] *** Terminating app due to uncaught exception 'NSInternalInconsistencyException', reason: 'precondition count > 0 violated before call to pop'

No, good. Having a contract is a bit like having unit tests, except that the unit tests are always running whenever your object is being used. Try out Eiffel; it’s pleasant to have real syntax for this, though really the Objective-C version isn’t so bad.
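One detail from earlier is worth a sketch: the invariant is only checked at the boundaries of public operations, so a method is free to break it temporarily. The following is a minimal illustration in plain C (which also compiles as Objective-C), not the implementation used in this post. The `CStack` type, its function names, and the extra "unused slots are empty" clause in the invariant are all assumptions made for the example, and failures are reported by returning `false` rather than by asserting.

```c
#include <stdbool.h>
#include <stddef.h>

enum { kMaxItems = 4 };

/* Hypothetical C stand-in for the Stack class. */
typedef struct {
    const char *slots[kMaxItems];
    int count;
} CStack;

/* Invariant: count is within bounds and every slot at or above
   count is empty. */
static bool invariant_holds(const CStack *s)
{
    if (s->count < 0 || s->count > kMaxItems) return false;
    for (int i = s->count; i < kMaxItems; i++) {
        if (s->slots[i] != NULL) return false;
    }
    return true;
}

/* Returns false if the contract is violated on entry or on exit. */
bool cstack_push(CStack *s, const char *item)
{
    /* Entry: invariant plus preconditions (non-NULL item, room left). */
    if (!invariant_holds(s) || item == NULL || s->count == kMaxItems) {
        return false;
    }
    s->slots[s->count++] = item;
    return invariant_holds(s);      /* exit check */
}

bool cstack_pop(CStack *s, const char **out)
{
    /* Entry: invariant plus the "count > 0" precondition. */
    if (!invariant_holds(s) || s->count == 0) {
        return false;
    }
    s->count--;
    /* Mid-method the invariant is broken: slots[count] still holds
       the popped item. Nobody checks it here, so that is allowed. */
    *out = s->slots[s->count];
    s->slots[s->count] = NULL;      /* invariant restored */
    return invariant_holds(s);      /* exit check */
}
```

Calling `invariant_holds` between the decrement and the clearing of the slot would report a violation, which is exactly why the checks sit at the edges of the public functions and nowhere else.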

Finally, the contract is implemented by some simple message interception (try doing that in your favourite modern programming language, non-Rubyists!).

    @interface ContractObject : NSObject

    - (NSDictionary *)contract;

    @end

    // Maps a public selector such as push: to its implementation, in_push:.
    static SEL internalSelector(SEL aSelector);

    @implementation ContractObject

    - (NSDictionary *)contract { return @{}; }

    // Subclasses implement in_foo rather than foo, so a message to foo goes
    // unrecognised and the runtime falls back to forwarding. Supply the
    // signature of in_foo so that an NSInvocation can be built.
    - (NSMethodSignature *)methodSignatureForSelector:(SEL)aSelector
    {
        NSMethodSignature *sig = [super methodSignatureForSelector:aSelector];
        if (!sig) {
            sig = [super methodSignatureForSelector:internalSelector(aSelector)];
        }
        return sig;
    }

    // Note: NSAssert compiles to nothing when NS_BLOCK_ASSERTIONS is defined,
    // so the contract is only enforced in builds that keep assertions on.
    - (void)forwardInvocation:(NSInvocation *)inv
    {
        SEL realSelector = internalSelector([inv selector]);
        if ([self respondsToSelector:realSelector]) {
            NSDictionary *contract = [self contract];
            NSPredicate *alwaysTrue = [NSPredicate predicateWithValue:YES];
            NSString *calledSelectorName = NSStringFromSelector([inv selector]);
            inv.selector = realSelector;

            // Missing clauses default to a predicate that always passes.
            NSPredicate *invariant = contract[@"invariant"]?:alwaysTrue;
            NSAssert([invariant evaluateWithObject:self],
                     @"invariant %@ violated before call to %@", invariant, calledSelectorName);
            NSString *preconditionKey = [@"pre_" stringByAppendingString:calledSelectorName];
            NSPredicate *precondition = contract[preconditionKey]?:alwaysTrue;
            NSAssert([precondition evaluateWithObject:self],
                     @"precondition %@ violated before call to %@", precondition, calledSelectorName);

            [inv invoke];

            NSString *postconditionKey = [@"post_" stringByAppendingString:calledSelectorName];
            NSPredicate *postcondition = contract[postconditionKey]?:alwaysTrue;
            NSAssert([postcondition evaluateWithObject:self],
                     @"postcondition %@ violated after call to %@", postcondition, calledSelectorName);
            NSAssert([invariant evaluateWithObject:self],
                     @"invariant %@ violated after call to %@", invariant, calledSelectorName);
        } else {
            // Nothing to redirect to: let NSObject raise the usual exception.
            [super forwardInvocation:inv];
        }
    }

    @end

    SEL internalSelector(SEL aSelector)
    {
        return NSSelectorFromString([@"in_" stringByAppendingString:NSStringFromSelector(aSelector)]);
    }
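If you don’t have an Objective-C runtime to hand, the order of checks in `forwardInvocation:` can still be sketched in plain C (which also compiles as Objective-C). This is only an approximation under stated assumptions: there is no message interception here, the caller wraps each operation explicitly, and every name (`Contract`, `call_with_contract`, the example predicates) is hypothetical. A bare `int` stands in for the stack’s count, and violations come back as a result code instead of an assertion failure.

```c
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical types mirroring the roles in the contract dictionary. */
typedef bool (*Predicate)(const void *object);
typedef void (*Operation)(void *object);

typedef struct {
    Predicate invariant;      /* NULL means "always true" */
    Predicate precondition;   /* NULL means "always true" */
    Predicate postcondition;  /* NULL means "always true" */
} Contract;

typedef enum {
    ContractOK,
    InvariantViolatedBefore,
    PreconditionViolated,
    PostconditionViolated,
    InvariantViolatedAfter
} ContractResult;

static bool holds(Predicate p, const void *object)
{
    return p == NULL || p(object);
}

/* Same order as forwardInvocation: above: invariant, precondition,
   the operation itself, postcondition, invariant again. */
ContractResult call_with_contract(const Contract *c, Operation op, void *object)
{
    if (!holds(c->invariant, object))     return InvariantViolatedBefore;
    if (!holds(c->precondition, object))  return PreconditionViolated;
    op(object);
    if (!holds(c->postcondition, object)) return PostconditionViolated;
    if (!holds(c->invariant, object))     return InvariantViolatedAfter;
    return ContractOK;
}

/* Example predicates and operations acting on an int count. */
enum { kMaximumCount = 4 };

bool count_in_bounds(const void *object)
{
    int count = *(const int *)object;
    return count >= 0 && count <= kMaximumCount;
}

bool count_positive(const void *object)
{
    return *(const int *)object > 0;
}

void increment(void *object) { ++*(int *)object; }
void decrement(void *object) { --*(int *)object; }
```

With a contract of `{ count_in_bounds, NULL, NULL }`, four calls wrapping `increment` on a zeroed count succeed and the fifth reports `InvariantViolatedAfter`, mirroring the first run above. Adding `count_positive` as the precondition and wrapping `decrement` on a zero count reports `PreconditionViolated` without touching the count.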