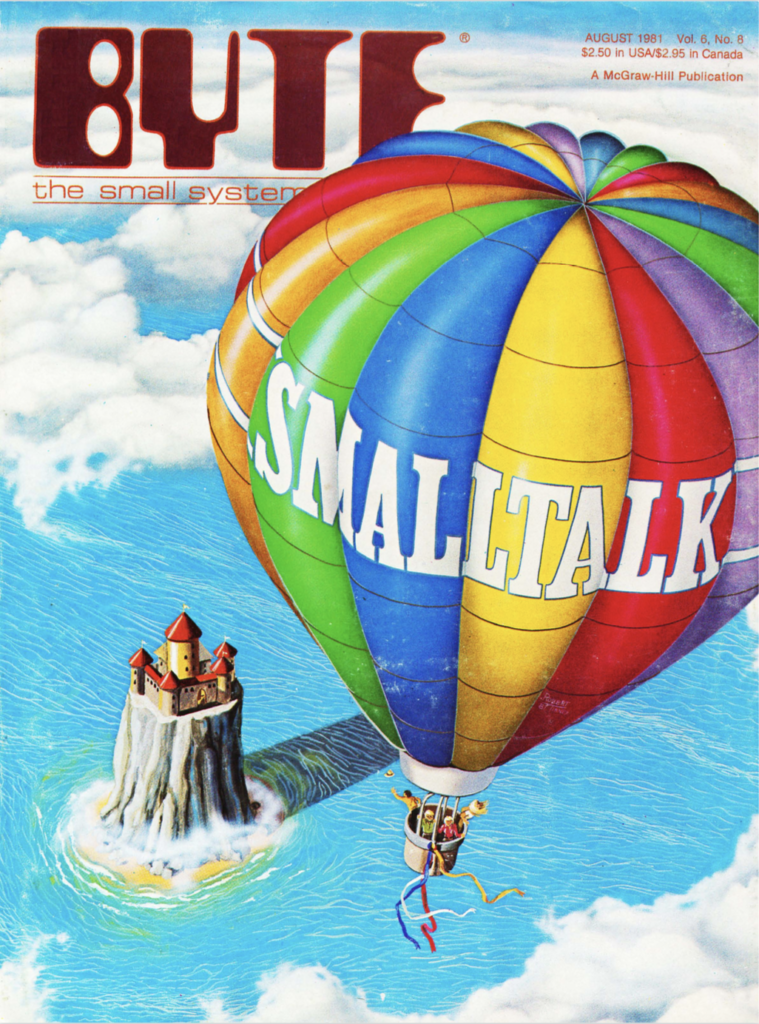

To this day, many Smalltalk projects have a hot air balloon in their logo. The balloon refers to the cover of the August 1981 issue of Byte magazine, in which Smalltalk-80 was shared with the wider programming community.

Modern Smalltalks all have a lot in common with Smalltalk-80. Why? If you compare Smalltalk-72 with Smalltalk-80 there’s a huge amount of evolution. So why does Cincom Smalltalk or Amber Smalltalk or Squeak or even Pharo still look quite a lot like Smalltalk-80?

My answer is that they are used. It’s Alan Kay’s answer too:

> Basically what happened is this vehicle became more and more a programmer’s vehicle and less and less a children’s vehicle—the version that got put out, Smalltalk ’80, I don’t think it was ever programmed by a child. I don’t think it could have been programmed by a child because it had lost some of its amenities, even as it gained pragmatic power.
>
> So the death of Smalltalk in a way came as soon as it got recognized by real programmers as being something useful; they made it into more of their own image, and it started losing its nice end-user features.

I think there are two different things you want from a programming language (well, programming environment, but let’s not split tree trunks). Referencing the ivory tower on the Byte cover, let’s call these two schools “academic” and “industrial”.

The industrial ones are out there, being used to solve problems. They need to be stable (some of these problems haven’t changed much in decades) and easy to understand (the people have changed), and they don’t need to be exciting, they just need to work. Cobol and Fortran are great in this regard, as are C and to some extent C++: you take code written a bajillion years ago, build it, and it works.

The academic ones are where the new ideas get tried out. They should enable experiment and excitement first, and maybe be easy to understand (but if you need to be an expert in the idea you’re trying out, that’s not so bad).

So the industrial and academic languages have conflicting goals. There’s going to be bad feedback set up if we try to achieve both goals in one place:

- the people who have used the language as a tool to solve problems won’t appreciate it if new ideas come along that mean they have to work to get their solution building or running correctly, again.

- the people who have used the language as a tool to explore new ideas won’t appreciate it if backwards compatibility hamstrings the ability to extend in new directions.

Unfortunately, at the moment a lot of languages are used for both, which leads to them being mediocre at both. The new “we’ve done C but betterer” languages like Go, Rust etc. have some people wanting to add new features, and other people wanting their existing stuff not to be broken. JavaScript is a mess of transpilation, shims, polyfills, and other words that mean “try to use a language, bearing in mind that nobody agrees how it’s supposed to work”.

Here are some patterns for managing the distinction that have been tried in the past:

- metaprogramming. Lisp in particular is great at having a little language that you can use to solve your problems, and that you can also use to make new languages or make the world work differently to see how that would work. Of course, if you can change the world then you can break the world, and Lisp isn’t great at making it clear that there’s a line between following the rules and writing new ones.

- pragmas. Haskell in particular is great at having a core language that people understand and use to write software, and a zillion flags that enable different features that one person pursued in their PhD that one time. Not all of the flag combinations may be that great, and it might be hard to know which things work well and which worked well enough to get a dissertation out of. But these are basically the “enable academic mode” settings, anyway.

- versions. Perl and Python both ran for years with a version x that was the safe, stable, industrial language, and a version y (it’s not x+1: Python’s parallel versions were 2 and 3000) in which people could explore extensions, removals, or other changes in potentially breaking ways. At some point, each project got to the point where they were happy with the choices, and declared the new version “ready” and available for industrial use. This involved some translation from version x, which wasn’t necessarily straightforward (though in the case of Python the difficulty was commonly overblown, so people avoided going from 2 to 3 even when it was easy). People being what they are, they put a lot of store in version numbers. So some people didn’t like that folks were recommending x when there was this clearly newer y available.

- FFIs. You can call industrial C89 code (which still works after three decades) from pretty much any academic language you care to invent. If you build a JVM language, it can do what it wants, and still call Java code.
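To make the FFI pattern concrete, here is a minimal sketch in which Python stands in for the newer language and calls `strlen`, which has been in the C standard library since C89. The wrapper name `c_strlen` is made up for illustration, and the library lookup assumes a POSIX-like system; treat it as one way this can look rather than the way to do it.

```python
import ctypes
import ctypes.util

# Locate the C standard library. find_library can return None on some
# systems; on POSIX, CDLL(None) then exposes the symbols already loaded
# into the process, which include libc. (Assumption: this fallback does
# not apply on Windows.)
libc = ctypes.CDLL(ctypes.util.find_library("c"))

# Declare the C signature: size_t strlen(const char *s);
libc.strlen.argtypes = [ctypes.c_char_p]
libc.strlen.restype = ctypes.c_size_t


def c_strlen(text: str) -> int:
    """Hypothetical wrapper: measure a string using C's strlen."""
    return libc.strlen(text.encode("utf-8"))


if __name__ == "__main__":
    print(c_strlen("Smalltalk-80"))  # prints 12
```

The C side is untouched and knows nothing about its caller, which is the point: the stable code stays stable, and the experimenting happens on the other side of the boundary.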

Anyway, I wonder whether that distinction between academic and industrial might be a good one to strengthen. If you make a new programming language project and try to get “users” too soon, you could lose the ability to take the language where you want it to go. And based on the experience of Smalltalk, too soon might be within the first decade.