Many parts of a modern software stack have been around for a long time. That has trade-offs, but in terms of user experience it is a great thing: software can be incrementally improved, providing customers with familiarity and stability. No need to learn an entirely new thing, because your existing thing just keeps on working.

It’s also great for developers, because it means we don’t have to play the Red Queen, always running just to stand still. We can focus on improving that customer experience, knowing that everything we wrote to date still works. And it does still work. Cocoa, for example, has a continuous history back to 2001, and there’s code written to use Cocoa APIs going back to 1994. Let’s port some old Cocoa software, to see how little effort it is to stay up to date.

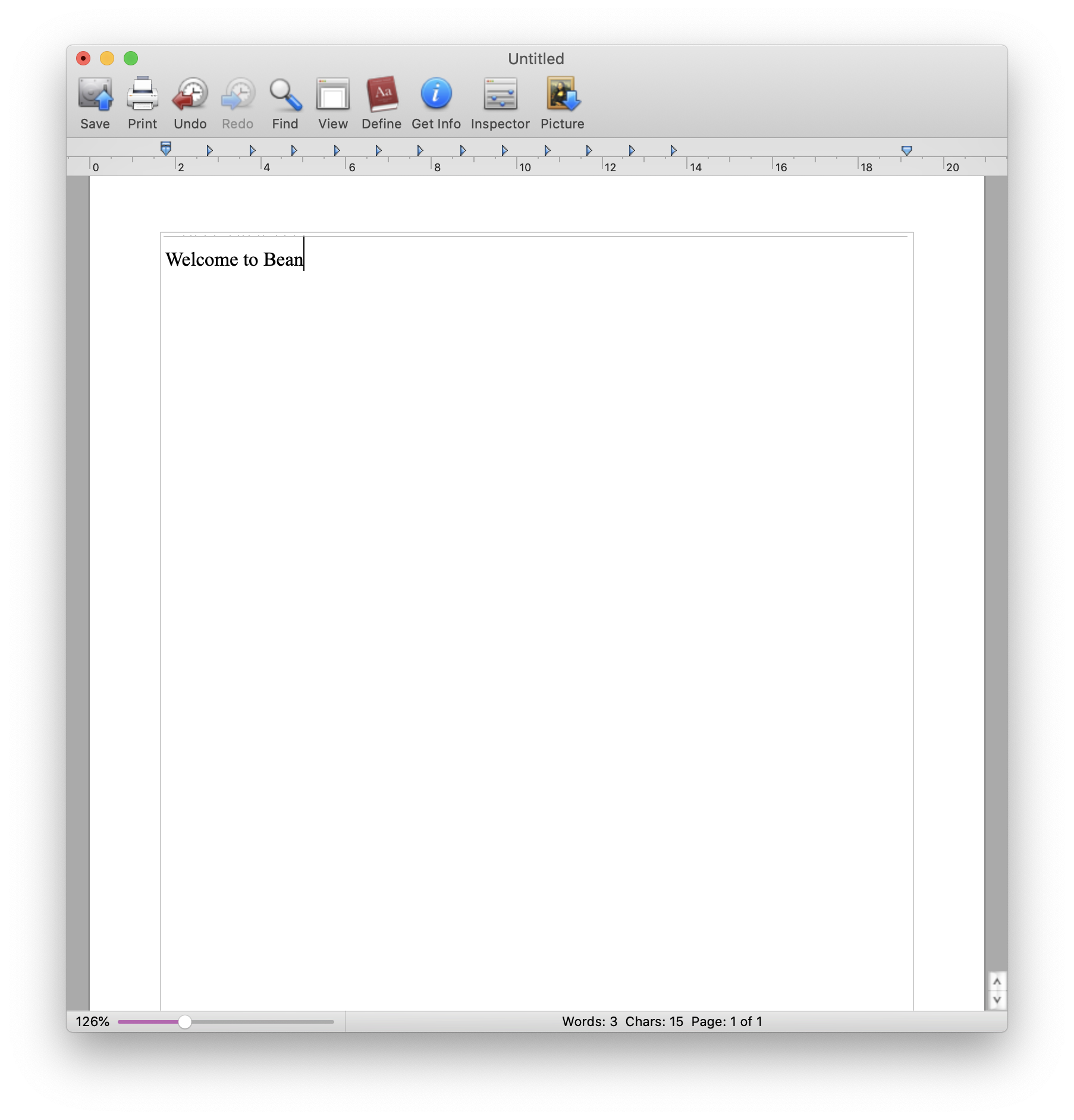

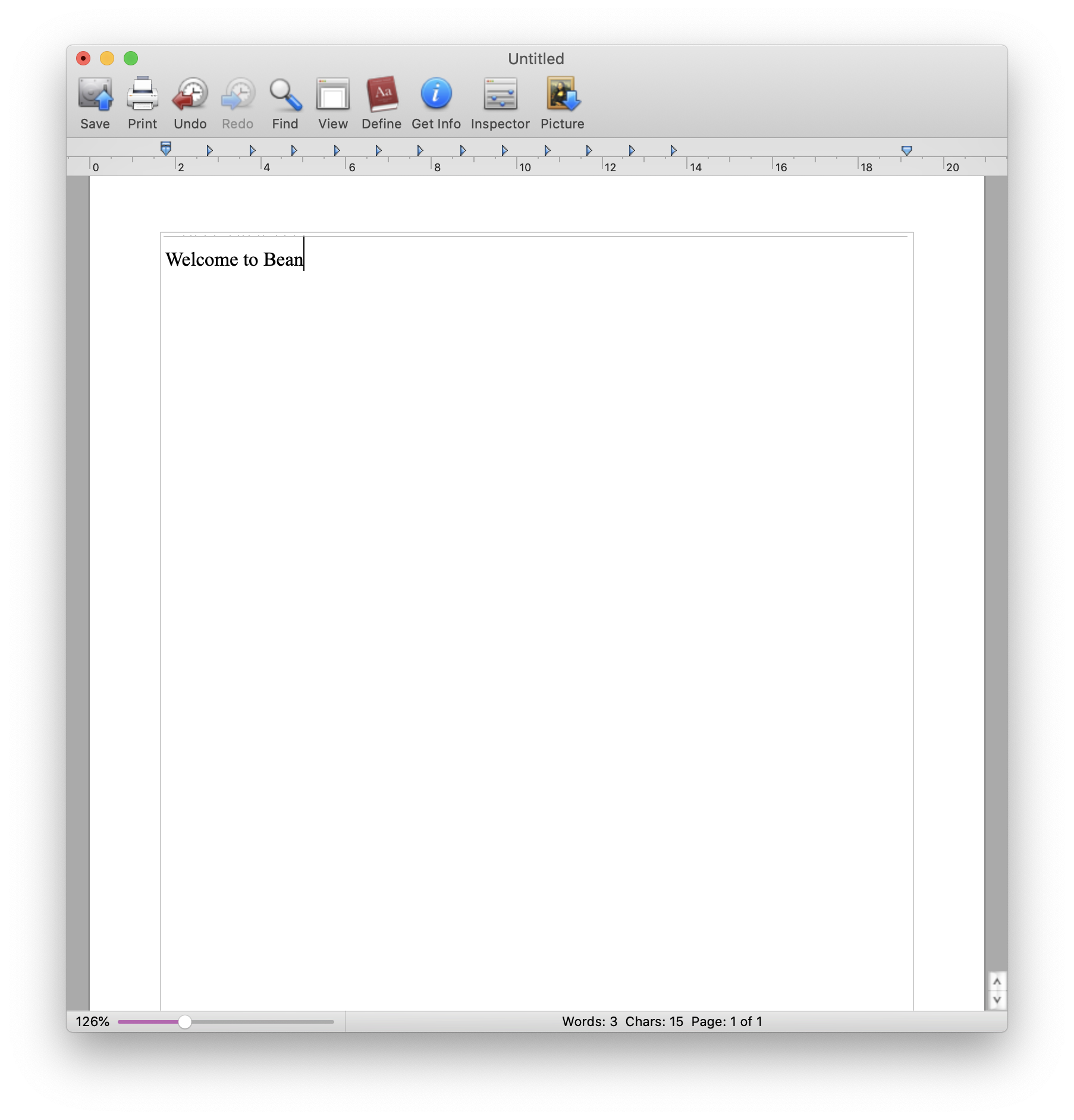

Bean is a free word processor for macOS. It’s written in Objective-C, using mostly Cocoa (but some Carbon) APIs, and uses the Cocoa Text system. The current version, Bean 3.3.0, is free, and supports macOS 10.14-10.15. The open source (GPL2) version, Bean 2.4.5, supports 10.4-10.5 on Intel and PowerPC. What would it take to make that a modern Cocoa app? Not much: a couple of hours’ work gave me a fully-working Bean 2.4.5 on Catalina. And a lot of that was unnecessary side-questing.

Step 1: Make Xcode happy

Bean 2.4.5 was built using the OS X 10.5 SDK, so it probably needed Xcode 3. Xcode 11 doesn’t have the OS X 10.5 SDK, so let’s build with the macOS 10.15 SDK instead. While I was here, I also accepted whatever updated settings Xcode suggested. That enabled the -fobjc-weak flag (weak references in code that doesn’t use automatic reference counting), which we can just turn off again because the deployment target won’t support it. So now we just build and run, right?

Not quite.

Step 2: Remove references to NeXT Objective-C runtime

Bean uses some “method swizzling” (i.e. swapping method implementations at runtime), mostly to work around differences in API behaviour between Tiger (10.4) and Leopard (10.5). That code no longer compiles:

    /Users/leeg/Projects/Bean-2-4-5/ApplicationDelegate.m:66:23: error: incomplete definition of type 'struct objc_method'
            temp1 = orig_method->method_types;
                    ~~~~~~~~~~~^
    In file included from /Users/leeg/Projects/Bean-2-4-5/ApplicationDelegate.m:31:
    /Applications/Xcode.app/Contents/Developer/Platforms/MacOSX.platform/Developer/SDKs/MacOSX10.15.sdk/usr/include/objc/runtime.h:44:16: note: forward declaration of 'struct objc_method'
    typedef struct objc_method *Method;
                   ^

The reason is that when Apple introduced the Objective-C 2.0 runtime in Leopard, they made it impossible to directly access the data structures used by the runtime. Those structures stayed in the headers for a couple of releases, but they’re long gone now. My first thought (and first fix) was just to delete this code, but I eventually relented and wrapped it in #if !__OBJC2__ so that my project should still build back to 10.4, assuming you update the SDK setting. It now builds cleanly, using clang and Xcode 11.5 (it builds in the beta of Xcode 12 too, in fact).
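To see why the compiler complains, here is a minimal plain-C sketch of the same "opaque structure" pattern. It is an analogy only: `demo_method`, `DemoMethod`, `demo_method_get_types` and `client_read_types` are hypothetical names invented for illustration, not part of the Objective-C runtime. The fix described above was to guard the old code with `#if !__OBJC2__`, not to rewrite it; the modern runtime does offer accessor functions such as `method_getTypeEncoding` for code that wants to go that way.

```c
#include <stddef.h>

/* What a client sees: a forward declaration plus an accessor function.
   Both names are hypothetical stand-ins for the runtime's own types. */
typedef struct demo_method *DemoMethod;
const char *demo_method_get_types(DemoMethod m);

/* Client code can only go through the accessor. Writing m->method_types
   at this point would fail with "incomplete definition of type
   'struct demo_method'", because the layout is not visible yet. */
const char *client_read_types(DemoMethod m)
{
    return demo_method_get_types(m);
}

/* What only the "runtime" side sees: the real layout. */
struct demo_method {
    const char *method_name;
    const char *method_types;
};

/* The accessor is free to change along with the layout,
   without breaking any client that uses it. */
const char *demo_method_get_types(DemoMethod m)
{
    return m != NULL ? m->method_types : NULL;
}
```

The design point is that a client holding only the forward declaration keeps compiling when the layout changes, which is exactly what direct field access like `orig_method->method_types` gives up.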

OK, ship it, right?

Step 3: Diagnose a stack smash

No. I launched it, and it crashed straight away. The stack trace looks like this:

    * thread #1, queue = 'com.apple.main-thread', stop reason = EXC_BAD_ACCESS (code=2, address=0x7ffeef3fffc8)
      * frame #0: 0x00000001001ef576 libMainThreadChecker.dylib`checker_c + 49
        frame #1: 0x00000001001ee7c4 libMainThreadChecker.dylib`trampoline_c + 67
        frame #2: 0x00000001001c66fc libMainThreadChecker.dylib`handler_start + 144
        frame #3: 0x00007fff36ac5d36 AppKit`-[NSTextView drawInsertionPointInRect:color:turnedOn:] + 132
        frame #4: 0x00007fff36ac5e6d AppKit`-[NSTextView drawInsertionPointInRect:color:turnedOn:] + 443
        [...]
        frame #40240: 0x00007fff36ac5e6d AppKit`-[NSTextView drawInsertionPointInRect:color:turnedOn:] + 443
        frame #40241: 0x00007fff36ac5e6d AppKit`-[NSTextView drawInsertionPointInRect:color:turnedOn:] + 443
        frame #40242: 0x00007fff36a6d98c AppKit`-[NSTextView(NSPrivate) _viewDidDrawInLayer:inContext:] + 328
        [...]

That’s, um. Well, it’s definitely not good. All of the backtrace is in API code, except for main() in the outermost frame. Has NSTextView really changed so much that it recurses forever when it tries to draw the cursor?

No. Actually, one of the many patches to AppKit in this app is not swizzled: it’s a category on NSTextView that replaces the two methods you can see in that stack trace. I could change those into swizzled methods and see if there’s a way to make them work, but for now I’ll remove them.

Side quest: rationalise some version checks

Everything works now. An app that was built for PowerPC Mac OS X and ported at some early point to 32-bit Intel runs, with just a couple of changes, on x86_64 macOS.

I want to fix one more thing. This message appears on launch and I would like to get rid of it:

    2020-07-09 21:15:28.032817+0100 Bean[4051:113348] WARNING: The Gestalt selector gestaltSystemVersion
    is returning 10.9.5 instead of 10.15.5. This is not a bug in Gestalt -- it is a documented limitation.
    Use NSProcessInfo's operatingSystemVersion property to get correct system version number.
    Call location:
    2020-07-09 21:15:28.033531+0100 Bean[4051:113348] 0 CarbonCore 0x00007fff3aa89f22 ___Gestalt_SystemVersion_block_invoke + 112
    2020-07-09 21:15:28.033599+0100 Bean[4051:113348] 1 libdispatch.dylib 0x0000000100362826 _dispatch_client_callout + 8
    2020-07-09 21:15:28.033645+0100 Bean[4051:113348] 2 libdispatch.dylib 0x0000000100363f87 _dispatch_once_callout + 87
    2020-07-09 21:15:28.033685+0100 Bean[4051:113348] 3 CarbonCore 0x00007fff3aa2bdb8 _Gestalt_SystemVersion + 945
    2020-07-09 21:15:28.033725+0100 Bean[4051:113348] 4 CarbonCore 0x00007fff3aa2b9cd Gestalt + 149
    2020-07-09 21:15:28.033764+0100 Bean[4051:113348] 5 Bean 0x0000000100015d6f -[JHDocumentController init] + 414
    2020-07-09 21:15:28.033802+0100 Bean[4051:113348] 6 AppKit 0x00007fff36877834 -[NSCustomObject nibInstantiate] + 413

A little history, here. Back in classic Mac OS, Gestalt was used like Unix programmers use sysctl and soda drink makers use high fructose corn syrup. Want to expose some information? Add a gestalt! Not bloated enough? Drink more gestalt!

It’s an API that takes a selector, and a pointer to some memory. What gets written to the memory depends on the selector. The gestaltSystemVersion selector makes it write the OS version number to the memory, but not very well. It only uses 32 bits. This turned out to be fine, because Apple didn’t release many operating systems. They used one quartet (i.e. one hexadecimal digit) each for the minor and patch release numbers, with the major version in the digits above them, so Mac OS 8.5.1 was represented as 0x0851.

When Mac OS X came along, Gestalt was part of the Carbon API, and the versions were reported as if the major release had bumped up to 16: 0x1000 was the first version, 0x1028 was a patch level release 10.2.8 of Jaguar, and so on. So maybe the system version is binary coded decimal.
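The binary-coded-decimal reading can be checked against the values quoted above. This is a minimal sketch, assuming the layout those examples imply (two decimal digits of major version, then one digit each for minor and patch); `bcd_version` and `decode_system_version` are hypothetical names, not Carbon API.

```c
#include <stdint.h>

/* Hypothetical helper type for illustration; not part of any Apple API. */
typedef struct {
    unsigned major;
    unsigned minor;
    unsigned patch;
} bcd_version;

/* Decode a gestaltSystemVersion-style value, treating each hexadecimal
   digit as one decimal digit: two digits of major version, then one
   digit each for the minor and patch numbers. */
bcd_version decode_system_version(uint32_t value)
{
    bcd_version v;
    v.major = ((value >> 12) & 0xF) * 10 + ((value >> 8) & 0xF);
    v.minor = (value >> 4) & 0xF;
    v.patch = value & 0xF;
    return v;
}
```

Under that assumption 0x0851 decodes to 8.5.1 and 0x1028 to 10.2.8, matching the versions named in the text.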

At some point, someone at Apple realised that if they ever did sixteen patch releases or sixteen minor releases, this would break. So they capped each of the patch/minor numbers at 9, and just told you to stop using gestaltSystemVersion. I would like to stop using it here, too.
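The capping explains the warning in the log. The sketch below encodes a version the way the text describes, clamping the minor and patch numbers to 9; it is a guess at the behaviour from the outside, not Apple’s implementation, and `clamp_digit` and `encode_system_version` are hypothetical names.

```c
#include <stdint.h>

/* Clamp one version component so it fits in a single decimal digit. */
unsigned clamp_digit(unsigned n)
{
    return n > 9 ? 9 : n;
}

/* Pack a version into the gestaltSystemVersion-style layout: two decimal
   digits of major version, then one clamped digit each for minor and patch. */
uint32_t encode_system_version(unsigned major, unsigned minor, unsigned patch)
{
    return (((major / 10) % 10) << 12)
         | ((major % 10) << 8)
         | (clamp_digit(minor) << 4)
         | clamp_digit(patch);
}
```

With this encoding 10.15.5 comes out as 0x1095, which reads back as the 10.9.5 that Gestalt reported on Catalina.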

There are lots of version number checks all over Bean. I’ve put them all in one place, and given it two ways to check the version: if -[NSProcessInfo isOperatingSystemAtLeastVersion:] is available, we use that. Actually that will never be relevant, because the tests are all for versions between 10.3 and 10.6, and that API was added in 10.10. So we then fall back to Gestalt again, but with the separate gestaltSystemVersionMajor/Minor selectors. These exist back to 10.4, which is perfect: if that fails, you’re on 10.3, which is the earliest OS Bean “runs” on. Actually it tells you it won’t run, and quits: Apple added a minimum-system check to Launch Services so you could use Info.plist to say whether your app works, and that mechanism isn’t supported in 10.3.
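The shape of that single version-checking place can be sketched in plain C. This is not Bean’s code: the real probes would call NSProcessInfo and Gestalt, which only exist on macOS, so `probe_unavailable` and `probe_reports_leopard` below are stand-ins, and `os_version`, `version_probe`, `version_at_least` and `detect_version` are all hypothetical names.

```c
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical version type; only major and minor matter for these checks. */
typedef struct {
    int major;
    int minor;
} os_version;

/* A probe fills in *out and returns true, or returns false if its
   underlying API is not available on the running system. */
typedef bool (*version_probe)(os_version *out);

/* Compare major first, then minor. */
bool version_at_least(os_version current, os_version required)
{
    if (current.major != required.major) {
        return current.major > required.major;
    }
    return current.minor >= required.minor;
}

/* Try each probe in order and use the first one that succeeds.
   If none does, assume 10.3, the oldest system mentioned in the text. */
os_version detect_version(const version_probe *probes, size_t count)
{
    for (size_t i = 0; i < count; i++) {
        os_version found;
        if (probes[i] != NULL && probes[i](&found)) {
            return found;
        }
    }
    os_version fallback = {10, 3};
    return fallback;
}

/* Stand-in for an API that is missing on this system. */
bool probe_unavailable(os_version *out)
{
    (void)out;
    return false;
}

/* Stand-in for an API that answers "10.5". */
bool probe_reports_leopard(os_version *out)
{
    out->major = 10;
    out->minor = 5;
    return true;
}
```

Passing the probes as an ordered list keeps the fallback order in one place, so every version check in the app asks the same question the same way.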

Ship it!

We’re done!

Haha, just kidding, we’re not done. Launching the thing isn’t enough, we’d better test it too.

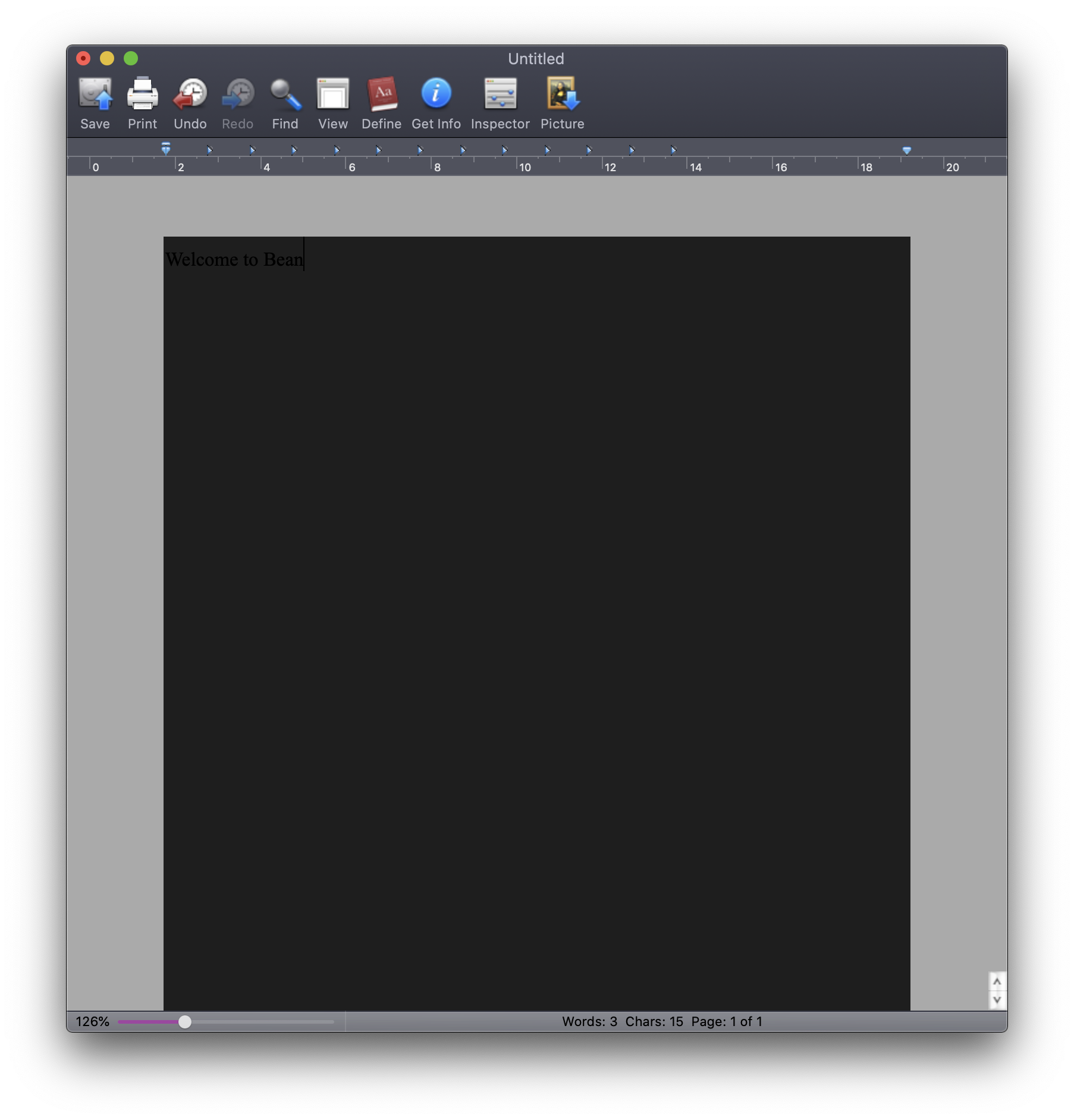

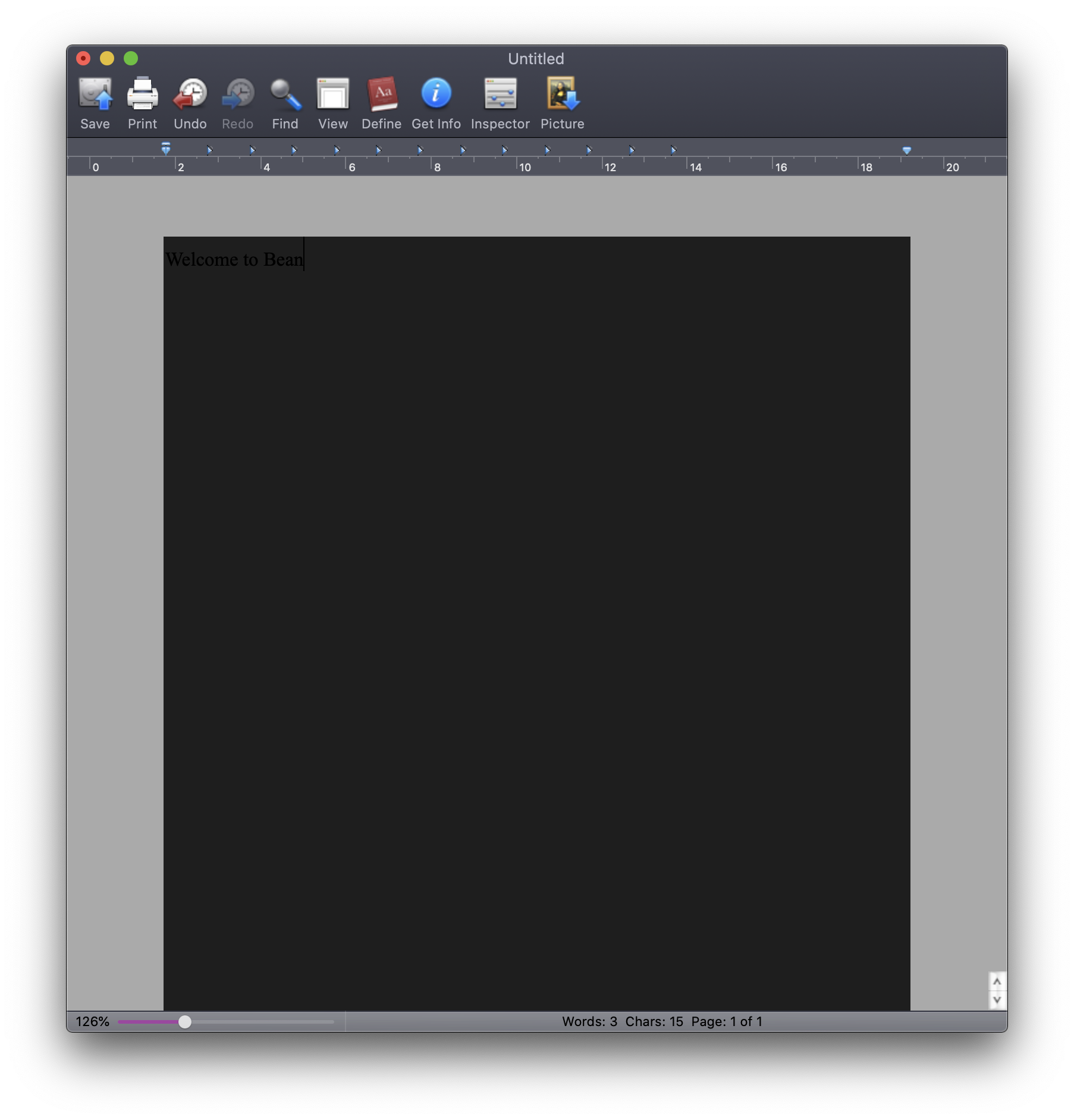

Dark mode wasn’t a thing in Tiger, but it is a thing now. Bean assumes that if it doesn’t set the background of an NSTextView, then it’ll be white. We’ll explicitly set that.

Actually ship it!

You can see the source on GitHub, and particularly how few changes are needed to make a 2006-era Cocoa application work on 2020-era macOS, despite a couple of CPU family switches and a bunch of changes to the UI.